Single-Page Applications (SPAs) are powered by client-side rendering templates, which give the end user a very dynamic experience. Recently, Google announced that it crawls web pages and executes JavaScript as a normal user would, so sites powered by SPA frameworks (Angular, Ember, and Vue, to name a few) can be crawled without a Google penalty.

Beyond search, other web crawlers are important to your site's visibility. In particular, the robots behind rich social sharing rely on meta tags and are still blind to JavaScript.

In this tutorial, we will build an alternate route and rendering module for your Express and Node.js server that you can use with most SPA frameworks and that will enable your site to have rich sharing on Twitter, Facebook and Pinterest.

A Word of Warning

This tutorial deals exclusively with web robots that extract social sharing information. Do not attempt this technique with search engine web crawlers. Search engine companies can take this kind of behavior seriously and treat it as spam or fraudulent behavior, and as such your rankings may quickly tank.

Similarly, with rich social-sharing information, make sure you are representing content in a way that aligns what the user sees with what the robot reads. Failing to keep this alignment could result in restrictions from social media sites.

Rich Social Sharing

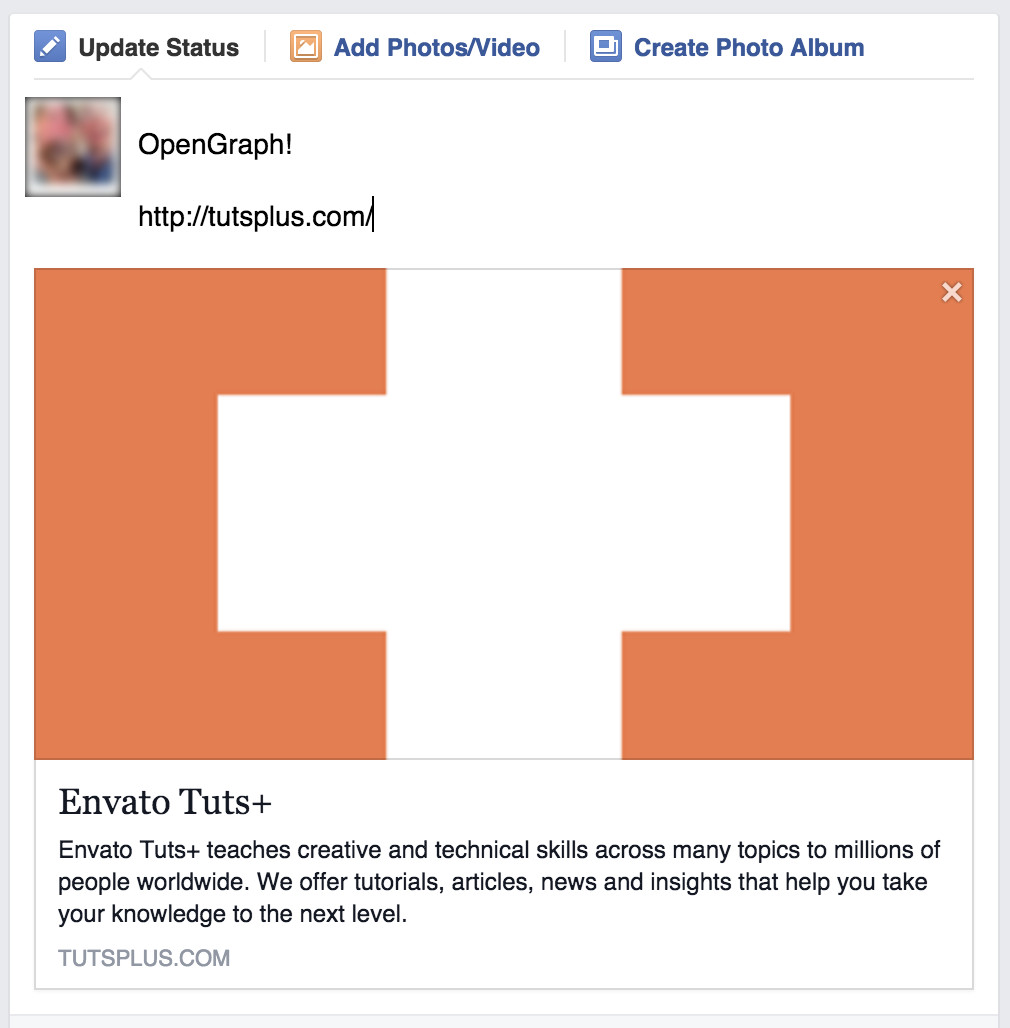

If you post an update in Facebook and include a URL, a Facebook robot will read the HTML and look for OpenGraph meta tags. Here is an example of the Envato Tuts+ homepage:

Inspecting the page, in the head tag, here are the relevant tags that generate this preview:

    <meta content="Envato Tuts+" property="og:title">
    <meta content="Envato Tuts+ teaches creative and technical skills across many topics to millions of people worldwide. We offer tutorials, articles, news and insights that help you take your knowledge to the next level." property="og:description">
    <meta content="http://static.tutsplus.com/assets/favicon-8b86ba48e7f31535461f183680fe2ac9.png" property="og:image">

Pinterest uses the same protocol as Facebook, OpenGraph, so their sharing works much the same.

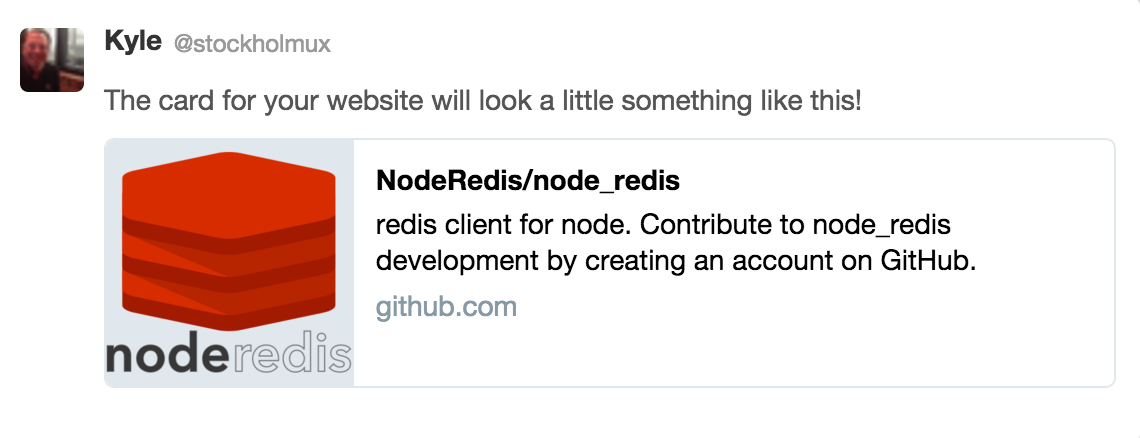

On Twitter, the concept is called a “card”, and Twitter has a few different varieties depending on how you want to present your content. Here is an example of a Twitter card from GitHub:

And here is the HTML that generates this card:

    <meta content="@github" name="twitter:site">
    <meta content="summary" name="twitter:card">
    <meta content="NodeRedis/node_redis" name="twitter:title">
    <meta content="redis client for node. Contribute to node_redis development by creating an account on GitHub." name="twitter:description">
    <meta content="https://avatars1.githubusercontent.com/u/5845577?v=3&s=400" name="twitter:image:src">

Note: GitHub is using a similar technique to what is described in this tutorial. The page HTML is slightly different in the tag with the name attribute set to twitter:description. I had to change the user agent as described later in the article to get the correct meta tag.

The Rub With Client-Side Rendering

Adding the meta tags is not a problem if you want just one title, description or image for the entire site. Just hard-code the values into the head of your HTML document. Likely, however, you are building a site that is much more complex, and you want your rich social sharing to vary based on the URL (which your framework is probably managing through the HTML5 History API).

The first attempt might be to build your template and add the values to the meta tags as you would with any other content. Since, at this time, the bots that extract this information do not execute JavaScript, you’ll end up with your template tags instead of your intended values when trying to share.
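To make the problem concrete, here is a minimal sketch of what a crawler that does not execute JavaScript would read from an unrendered template. The `{{ page.title }}` placeholder syntax and the `readMetaContent` helper are assumptions made for illustration; your framework's template syntax will differ, and real bots use proper HTML parsers rather than a regular expression.

```javascript
// The raw HTML as served, before any client-side framework has rendered it.
// The {{ ... }} placeholder and the page.title name are illustrative assumptions.
var rawHtml =
  '<head>' +
  '<meta property="og:title" content="{{ page.title }}">' +
  '</head>';

// Naive extraction of a meta tag's content attribute, for demonstration only.
// It assumes the property attribute appears before content, as in rawHtml above.
function readMetaContent(html, property) {
  var pattern = new RegExp(
    '<meta[^>]*property="' + property + '"[^>]*content="([^"]*)"'
  );
  var match = pattern.exec(html);
  return match ? match[1] : null;
}

// Logs the unrendered placeholder rather than a real title.
console.log(readMetaContent(rawHtml, 'og:title'));
```

The bot ends up with the placeholder text as the share title, which is exactly what shows up in the preview.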

To make the site readable by bots, we’re building a middleware that detects the user agent of the social sharing bots, and then an alternate router that will serve up the correct content to the bots, avoiding using your SPA framework.

The User Agent Middleware

Clients (robots, web crawlers, browsers) send a User Agent (UA) string in the HTTP headers of every request. This is supposed to identify the client software; while web browsers have a huge variety of UA strings, bots tend to be more or less stable. Facebook, Twitter and Pinterest publish the user agent strings of their bots as a courtesy.

In Express, the UA string is available on the request object as req.headers['user-agent']. I'm using a regular expression to identify the bots that should be served alternate content. We'll put this check in a middleware. Middlewares are like routes, but they don't need a path or method, and they (generally) pass the request on to another middleware or to the routes. In Express, routes and middlewares run in the order they are registered, so place this above any other routes in your Express app.

    app.use(function (req, res, next) {
      var ua = req.headers['user-agent'];
      // Match any of the bot names anywhere in the UA string, ignoring case
      if (/facebookexternalhit|Twitterbot|Pinterest/i.test(ua)) {
        console.log(ua, 'is a bot');
      }
      next();
    });

The regular expression above looks for “facebookexternalhit”, “Twitterbot” or “Pinterest” anywhere in the UA string, ignoring case. If one of them is found, the UA is logged to the console.
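The pattern can also be checked outside Express. The following is a minimal sketch, assuming the sample UA strings shown (they are illustrative values, not an authoritative list) and a hypothetical helper named `isSocialBot`. The sketch matches the three names anywhere in the string; note that in a pattern of the form `^(a)|(b)|(c)` the `^` anchor would apply to the first alternative only.

```javascript
// One shared pattern: any of the three bot names, case-insensitive.
// No "g" flag, so the regex carries no state between calls.
var BOT_PATTERN = /facebookexternalhit|Twitterbot|Pinterest/i;

// Hypothetical helper: true when the UA string names a known sharing bot.
function isSocialBot(ua) {
  // A missing header arrives as undefined; treat it as "not a bot".
  return typeof ua === 'string' && BOT_PATTERN.test(ua);
}

// Sample UA strings, assumed for illustration.
var samples = [
  'facebookexternalhit/1.1 (+http://www.facebook.com/externalhit_uatext.php)',
  'Twitterbot/1.0',
  'Pinterest/0.2 (+http://www.pinterest.com/)',
  'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 Chrome/120.0 Safari/537.36'
];

samples.forEach(function (ua) {
  console.log(isSocialBot(ua) ? 'bot:    ' : 'browser:', ua);
});
```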

Here is the whole server:

    var express = require('express'),
        app = express(),
        server;

    // Log requests that come from social sharing bots
    app.use(function (req, res, next) {
      var ua = req.headers['user-agent'];
      if (/facebookexternalhit|Twitterbot|Pinterest/i.test(ua)) {
        console.log(ua, 'is a bot');
      }
      next();
    });

    app.get('/', function (req, res) {
      res.send('Serve SPA');
    });

    server = app.listen(8000, function () {
      console.log('Server started.');
    });

Testing Your Middleware

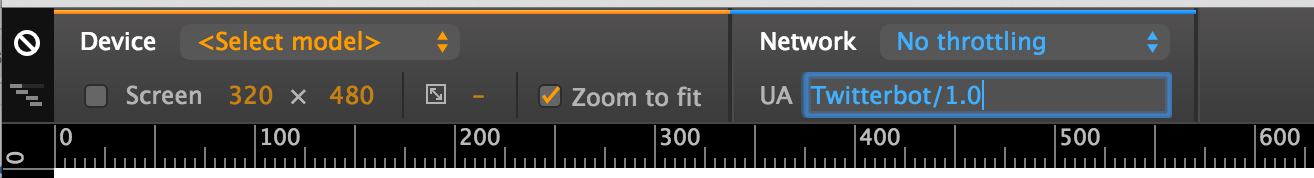

In Chrome, navigate to your new server (which should be http://localhost:8000/). Open DevTools and turn on ‘Device Mode’ by clicking the smartphone icon in the upper left part of the developer pane.

On the device toolbar, put “Twitterbot/1.0” into the UA edit box.

Now, reload the page.

At this point, you should see “Serve SPA” in the page, but looking at the console output of your Express app, you should see:

Twitterbot/1.0 is a bot

Alternate Routing

Now that we can identify bots, let's build an alternate router. Express can use multiple routers, which are often used to partition routes by path. In this case, we're going to use a router in a slightly different way. Routers are essentially middlewares, so they accept req, res, and next, just like any other middleware. The idea here is to generate a different set of routes that has the same paths.

    var nonSPArouter = express.Router();

    nonSPArouter.get('/', function (req, res) {
      res.send('Serve regular HTML with metatags');
    });

Our middleware needs to be changed as well. Instead of just logging that the client is a bot, we will now send the request to the new router and, importantly, only pass it along with next() if the UA test fails. So, in short, bots get one router and everyone else gets the standard router that serves the SPA code.

    var express = require('express'),
        app = express(),
        nonSPArouter = express.Router(),
        server;

    // Routes served to bots only
    nonSPArouter.get('/', function (req, res) {
      res.send('Serve regular HTML with metatags');
    });

    // Bots go to the alternate router; everyone else continues to the SPA routes
    app.use(function (req, res, next) {
      var ua = req.headers['user-agent'];
      if (/facebookexternalhit|Twitterbot|Pinterest/i.test(ua)) {
        console.log(ua, 'is a bot');
        nonSPArouter(req, res, next);
      } else {
        next();
      }
    });

    app.get('/', function (req, res) {
      res.send('Serve SPA');
    });

    server = app.listen(8000, function () {
      console.log('Server started.');
    });

If we test using the same routine as above, setting the UA to Twitterbot/1.0, the browser will show this on reload:

Serve regular HTML with metatags

While with the standard Chrome UA you’ll get:

Serve SPA
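The dispatch logic can also be exercised without a browser or a running server. The sketch below is a simplified stand-in, not Express itself: `botGate`, `simulate`, and the fake req and res objects are hypothetical names introduced here, and the handlers only mimic the two sets of routes.

```javascript
// Returns an Express-style middleware: bots go to botHandler, everyone else to next().
function botGate(pattern, botHandler) {
  return function (req, res, next) {
    var ua = req.headers['user-agent'] || '';
    if (pattern.test(ua)) {
      botHandler(req, res, next);
    } else {
      next();
    }
  };
}

// Stand-ins for the bot routes and the SPA routes.
function botHandler(req, res) { res.send('Serve regular HTML with metatags'); }
function spaHandler(req, res) { res.send('Serve SPA'); }

// Runs one fake request through the gate and returns the body that was sent.
function simulate(ua) {
  var sent = null;
  var req = { headers: { 'user-agent': ua } };
  var res = { send: function (body) { sent = body; } };
  var gate = botGate(/facebookexternalhit|Twitterbot|Pinterest/i, botHandler);
  gate(req, res, function next() { spaHandler(req, res); });
  return sent;
}

console.log(simulate('Twitterbot/1.0'));           // Serve regular HTML with metatags
console.log(simulate('Mozilla/5.0 Chrome/120.0')); // Serve SPA
```

Because a router accepts req, res, and next like any other middleware, the same factory could in principle be mounted as app.use(botGate(pattern, nonSPArouter)) in the real server.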

Meta Tags

As we examined above, rich social sharing relies on meta tags inside the head of your HTML document. Since you're building a SPA, you may not even have a templating engine installed. For this tutorial, we'll use jade (the project has since been renamed Pug). Jade is a fairly simple templating language where spaces and tabs are significant and closing tags are not needed. We can install it by running:

npm install jade

In our server source code, add this line before your app.listen.

app.set('view engine', 'jade');

Now, we're going to supply the information we want to serve to the bots only. We'll modify the nonSPArouter. Since we've set up the view engine with app.set, res.render will do the jade rendering.

Let’s set up a small jade template to serve to the social sharing bots:

    doctype html
    html
      head
        title= title
        meta(property="og:url", name="twitter:url", content=url)
        meta(property="og:title", name="twitter:title", content=title)
        meta(property="og:description", name="twitter:description", content=descriptionText)
        meta(property="og:image", content=imageUrl)
        meta(property="og:type", content="article")
        meta(name="twitter:card", content="summary")
      body
        h1= title
        img(src=img, alt=title)
        p= descriptionText

Most of this template is the meta tags, but you can also see that I included the information in the body of the document. As of the writing of this tutorial, none of the social sharing bots seem to actually look at anything else beyond the meta tags, but it’s good practice to include the information in a somewhat human readable way, should any sort of human checking be implemented at a later date.
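For reference, the meta tags this template is expected to produce can be sketched in plain JavaScript. This is an approximation for checking your output by eye, not what jade does internally: `escapeAttr`, `metaTag`, and `buildMetaTags` are hypothetical helpers, the example.com URLs are placeholders, and the exact whitespace of the engine's output may differ.

```javascript
// Escapes the characters that are unsafe inside a double-quoted HTML attribute.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Builds one <meta> tag from an object of attribute names and values.
function metaTag(attrs) {
  var parts = Object.keys(attrs).map(function (key) {
    return key + '="' + escapeAttr(attrs[key]) + '"';
  });
  return '<meta ' + parts.join(' ') + '>';
}

// Mirrors the template: field names match the locals url, title,
// descriptionText and imageUrl.
function buildMetaTags(data) {
  return [
    metaTag({ property: 'og:url', name: 'twitter:url', content: data.url }),
    metaTag({ property: 'og:title', name: 'twitter:title', content: data.title }),
    metaTag({ property: 'og:description', name: 'twitter:description', content: data.descriptionText }),
    metaTag({ property: 'og:image', content: data.imageUrl }),
    metaTag({ property: 'og:type', content: 'article' }),
    metaTag({ name: 'twitter:card', content: 'summary' })
  ].join('\n');
}

console.log(buildMetaTags({
  url: 'https://example.com/',
  title: 'Bot "Test"',
  descriptionText: 'This is designed to appeal to bots',
  imageUrl: 'https://example.com/placeholder.png'
}));
```

Comparing this output with the HTML your server actually returns to a bot UA is a quick sanity check before moving on to the platform debuggers.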

Save the template to your app's views directory (Express looks in ./views by default) and name it bot.jade. The filename without an extension ('bot') will be the first argument of the res.render function.

While it’s always a good idea to develop locally, you’re going to need to expose your app in its final location to fully debug your meta tags. A deployable version of our tiny server looks like this:

    var express = require('express'),
        app = express(),
        nonSPArouter = express.Router(),
        server;

    // Routes served to bots only: render the meta tag template
    nonSPArouter.get('/', function (req, res) {
      var img = 'placeholder.png';
      res.render('bot', {
        img: img,
        url: 'https://bot-social-share.herokuapp.com/',
        title: 'Bot Test',
        descriptionText: 'This is designed to appeal to bots',
        imageUrl: 'https://bot-social-share.herokuapp.com/' + img
      });
    });

    // Bots go to the alternate router; everyone else continues to the SPA routes
    app.use(function (req, res, next) {
      var ua = req.headers['user-agent'];
      if (/facebookexternalhit|Twitterbot|Pinterest/i.test(ua)) {
        console.log(ua, 'is a bot');
        nonSPArouter(req, res, next);
      } else {
        next();
      }
    });

    app.get('/', function (req, res) {
      res.send('Serve SPA');
    });

    // Serve the share image and other static files
    app.use('/', express.static(__dirname + '/static'));

    app.set('view engine', 'jade');

    server = app.listen(process.env.PORT || 8000, function () {
      console.log('Server started.');
    });

Also note that I’m using the express.static middleware to serve the image from the /static directory.

Debugging Your App

Once you’ve deployed your app to somewhere publicly accessible, you should verify that your meta tags are working as desired.

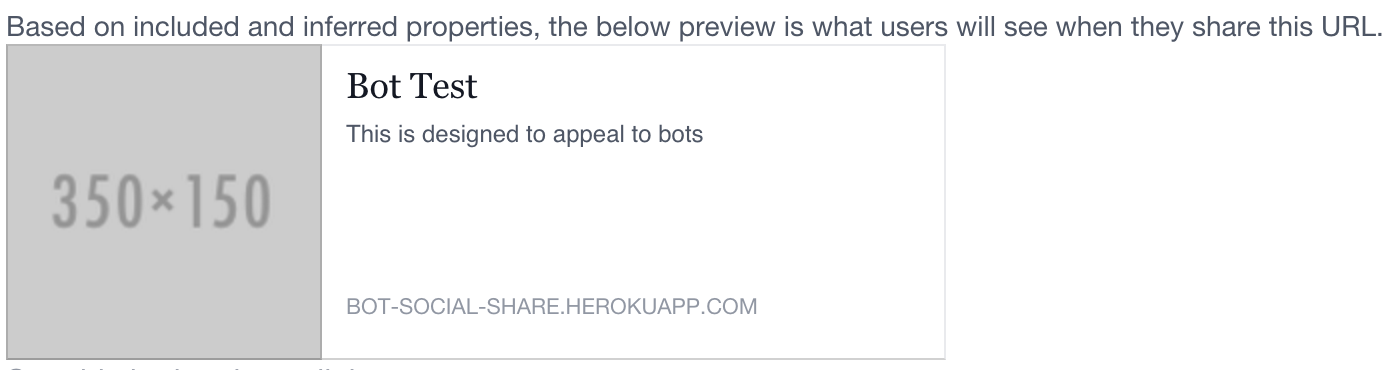

First up, you can test with the Facebook Debugger. Input your URL and click Fetch new scrape information.

You should see something like:

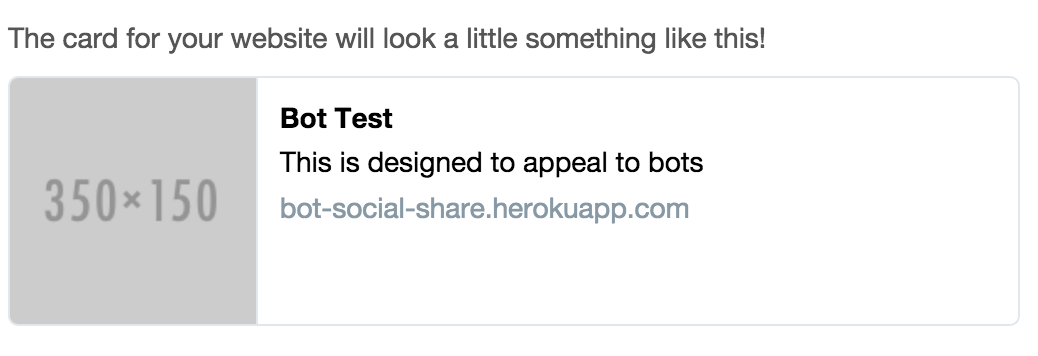

Next, you can move on to testing your Twitter card with the Twitter Card Validator. For this one, you need to be logged in with your Twitter account.

Pinterest provides a debugger, but this example will not work out-of-the-box because Pinterest only allows “rich pins” on URLs other than your homepage.

Next Steps

In your actual implementation, you’ll need to handle integrating your data source and routing. It’s best to look at the routes specified in your SPA code and create an alternate version for everything you think may be shared. After you’ve established the routes that will be likely to be shared, set up meta tags in your primary template that function as your fallback in the situation that someone shares a page you didn’t expect.
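One way to approach the data integration is a lookup from path to share data. The sketch below is an assumption-heavy illustration: `SHARE_DATA`, `BASE_URL`, `shareDataFor`, and the example paths are all hypothetical, and a real application would query its own data source instead of an in-memory object.

```javascript
// Hypothetical in-memory data source keyed by path.
var SHARE_DATA = {
  '/': { title: 'Home', descriptionText: 'Welcome to the site', img: 'home.png' },
  '/articles/42': { title: 'Article 42', descriptionText: 'An example article', img: 'article-42.png' }
};

// Assumed placeholder; replace with your deployed origin.
var BASE_URL = 'https://example.com';

// Returns the locals for the bot template, or null when the path is unknown.
function shareDataFor(path) {
  if (!Object.prototype.hasOwnProperty.call(SHARE_DATA, path)) {
    return null;
  }
  var entry = SHARE_DATA[path];
  return {
    url: BASE_URL + path,
    title: entry.title,
    descriptionText: entry.descriptionText,
    img: entry.img,
    imageUrl: BASE_URL + '/' + entry.img
  };
}

console.log(shareDataFor('/articles/42'));
console.log(shareDataFor('/not-shared')); // null
```

In a bot route, a null result could be handled by calling next(), so the request falls through to the primary template and its fallback meta tags.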

While Pinterest, Facebook and Twitter represent a large chunk of the social media market, you may have other services that you want to integrate. Some services publish the name of their social sharing robots, while others may not. To determine the user agent, you can log req.headers['user-agent'] with console.log and examine the console output. Try this first on a non-production server, as you may have a bad time trying to pick out the user agent on a busy site. From that point, you can modify the regular expression in our middleware to also catch the new user agent.
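If the list of bots is likely to grow, it may be easier to build the regular expression from an array of names. This is a minimal sketch under that assumption; `buildBotPattern` and `escapeRegExp` are hypothetical helpers, and `LinkedInBot` is included only as an example of a newly observed agent that you should confirm against your own logs.

```javascript
// Escapes characters that have special meaning in a regular expression.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, '\\$&');
}

// Builds one case-insensitive pattern from a list of bot name fragments.
function buildBotPattern(names) {
  if (names.length === 0) {
    // An empty pattern would match every UA string, so refuse to build one.
    throw new Error('At least one bot name is required');
  }
  return new RegExp(names.map(escapeRegExp).join('|'), 'i');
}

var botPattern = buildBotPattern([
  'facebookexternalhit',
  'Twitterbot',
  'Pinterest',
  'LinkedInBot' // example addition; verify against your own logs
]);

console.log(botPattern.test('LinkedInBot/1.0 (compatible; Mozilla/5.0)')); // true
console.log(botPattern.test('Mozilla/5.0 Chrome/120.0'));                  // false
```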

Rich social media shares can be a great way to pull people into your fancy single-page-application-based website. By selectively directing robots to machine-readable content, you can provide just the right information to the robots.
