Making an application context-aware is one of the best ways to offer useful services to your users. While there are still multiple ways to do this—including geofences, activity recognition, and other location services—Google has recently released the Awareness API, which allows developers to create apps that intelligently react to the user's real world situation. The Awareness API combines the Places API, Locations API, Activity Recognition, and Nearby API, as well as adding support for headphone state and weather detection.

In this tutorial you will learn about the Awareness API and how to access snapshots of data, as well as how to create listeners (known as fences, taking their name from geofences) for combinations of user conditions that match the goals of your applications. This can be useful for a wide variety of apps, such as location-based games, offering coupons to users in stores, and starting a music app when you detect a user exercising. All code for this sample application can be found on GitHub.

1. Setting Up the Developer Console

Before diving into your Android application, you will need to set up Google Play Services through the Google API Console. If you already have a project created, you can skip the first step of this section. If not, open the Google API Console and follow along to create a new project for your application.

Step 1: Creating a Project

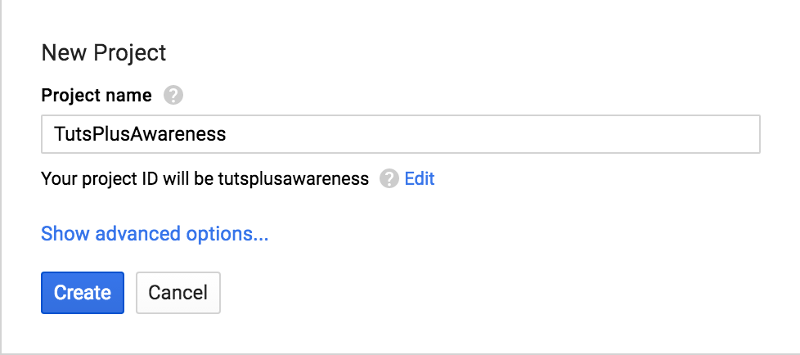

To create a new project, click on the blue Create Project button in the top center of the screen.

This presents you with a dialog that asks for a project name. For this tutorial, I have created a project called TutsPlusAwareness. Project names are restricted: only letters, numbers, quotes, hyphens, spaces, and exclamation points are allowed.

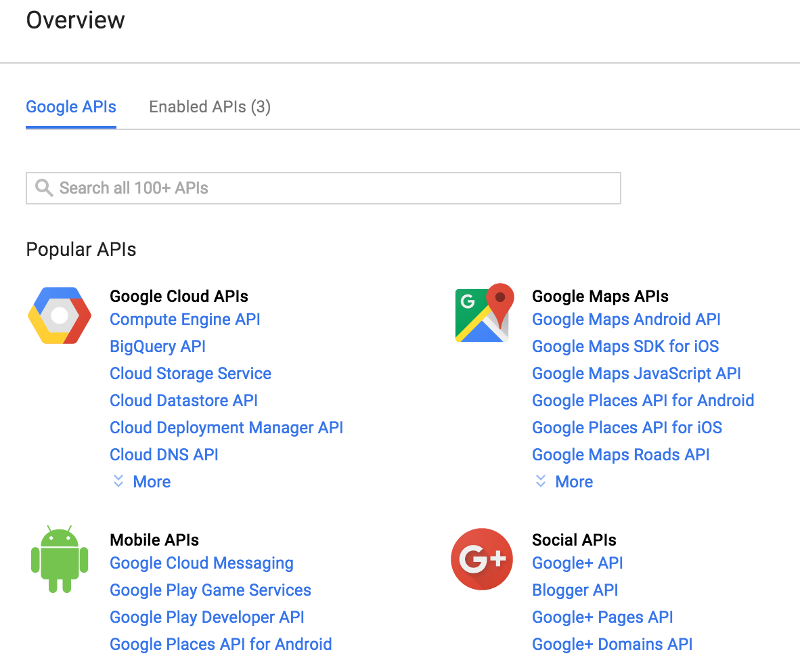

Once you hit Create, a dialog appears in the lower right corner of the page indicating that the project is being created. Once it has disappeared, your project will be available for setting up. You should see a screen similar to the following. If not, click on the Google APIs logo in the top left corner to be taken to the API manager screen.

Step 2: Enabling the Necessary API

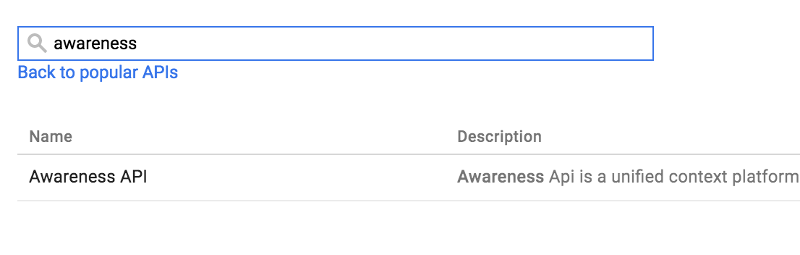

From the Google APIs Overview screen, select the search box and search for the Awareness API.

Once you have selected Awareness API from the returned results, click on the blue Enable button to allow your app to use the Awareness API. If this is the first API you have enabled, you will be prompted to create a set of credentials. Continue to the Credentials page for step 3 of this tutorial.

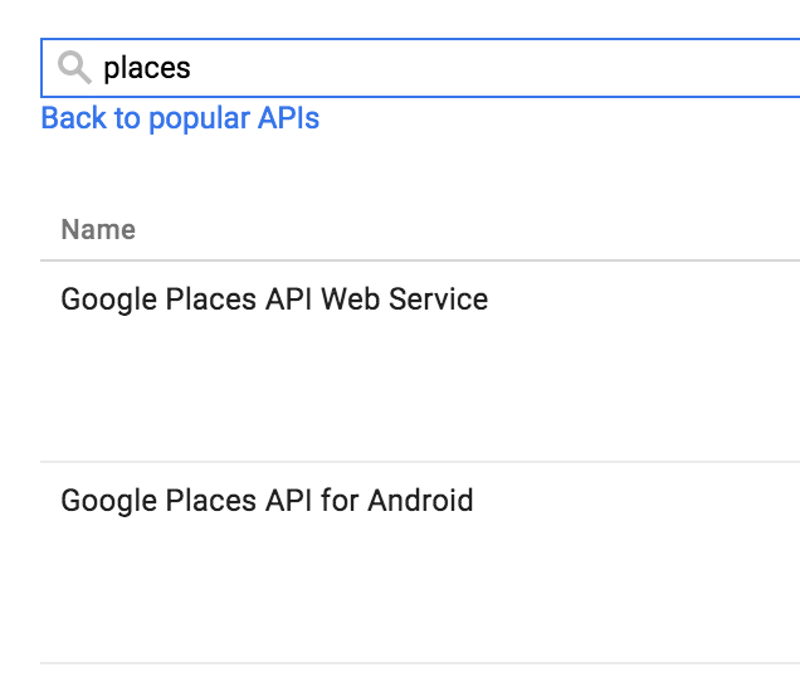

In addition to the Awareness API, there are additional APIs that you may need to enable. If your app uses the Places functionality of the Awareness API, you will need to enable Google Places API for Android.

If your app uses beacons, you will also need to enable the Nearby Messages API.

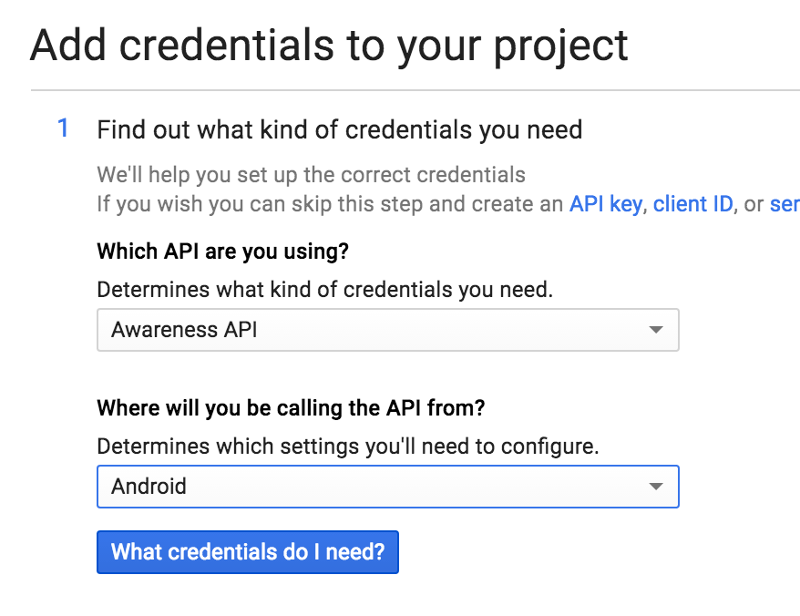

Step 3: Creating An Android API Key

In order to use the enabled APIs, you will need to generate an API key for your Android app. On the credentials page for your Google project, select Awareness API from the top dropdown menu and Android from the second.

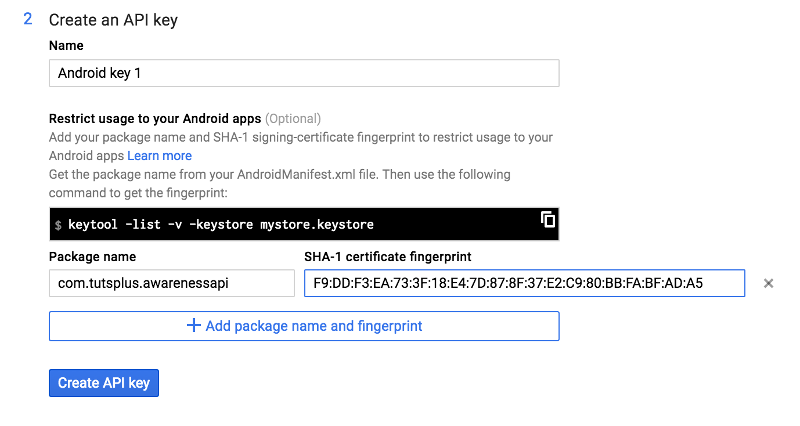

Next you will be taken to a screen where you can enter a package name for your app and the SHA-1 certificate fingerprint of the app's signing key. To get the fingerprint for your debug key on Linux or OS X, enter the following command in a terminal window.

keytool -list -v -keystore ~/.android/debug.keystore -alias androiddebugkey -storepass android -keypass android

On Windows, you can run the same command with the path set to the location of your debug.keystore file.
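The SHA1 value that keytool prints is a digest shown as colon-separated hex pairs. As a rough illustration of that format only, here is a minimal plain-Java sketch that digests a short test string instead of a real certificate. The class and method names are hypothetical and are not part of the tutorial's project.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

class FingerprintSketch {

    // Returns the SHA-1 digest of the input as upper-case, colon-separated hex pairs
    static String sha1Fingerprint(byte[] data) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-1").digest(data);
            StringBuilder sb = new StringBuilder();
            for (int i = 0; i < digest.length; i++) {
                if (i > 0) {
                    sb.append(':');
                }
                // Mask to an unsigned value so negative bytes format as two hex digits
                sb.append(String.format("%02X", digest[i] & 0xFF));
            }
            return sb.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-1 is not available", e);
        }
    }

    public static void main(String[] args) {
        // Demo input only; a real fingerprint is computed over the encoded certificate
        System.out.println(sha1Fingerprint("abc".getBytes(StandardCharsets.UTF_8)));
    }
}
```

The value you paste into the console should have the same shape: twenty hex pairs separated by colons.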

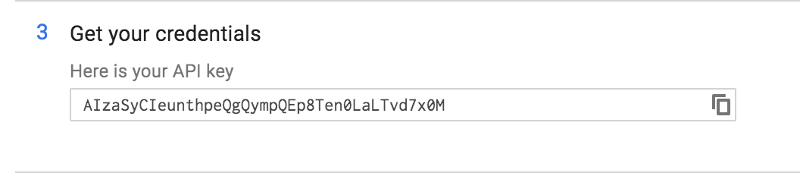

Once you click the Create API key button, you will be given the API key that you will need to use in your Android application.

2. Setting Up the Android Project

Once you have an API key created and the proper APIs enabled, it's time to start setting up your Android project. For this tutorial, we'll create a test application in order to learn the API.

In order to demonstrate all of the features of the Awareness API, this tutorial will focus on using a simple list representing each feature used. Although the details of creating this list will not be discussed, you can find a complete implementation in the GitHub source for this tutorial.

Step 1: Set Up Play Services

First you will need to include the Play Services library in your build.gradle file. While you can include all of Google Play Services, it's best to include only the packages you need for your app.

In this case, the Awareness API is available in the ContextManager package, and it can be included in your project by adding the following lines within the dependencies node. You will also want to make sure the AppCompat library is included, as this will be used for checking permissions on devices running Marshmallow and above.

compile 'com.google.android.gms:play-services-contextmanager:9.2.0'

compile 'com.android.support:appcompat-v7:23.4.0'

Once you have added the above lines, sync your project and open the strings.xml file for your project. You will want to place your API key from the Google API Console into a string value.

<string name="google_play_services_key">YOUR API KEY HERE</string>

After you have added your API key, you will need to open your project's AndroidManifest.xml file. Depending on what features of the Awareness API you use, you will need to include permissions for your app. If your app uses the beacon, location, places or weather functionality of the Awareness API, then you will need to include the ACCESS_FINE_LOCATION permission. If you need to use the activity recognition functionality, then you will require the ACTIVITY_RECOGNITION permission.

<uses-permission android:name="android.permission.ACCESS_FINE_LOCATION" />

<uses-permission android:name="com.google.android.gms.permission.ACTIVITY_RECOGNITION" />

Next you will need to declare meta-data within the application node that ties your app to the Google API services. Depending on what your app uses, you will also need to include the com.google.android.geo.API_KEY metadata for the location and places features, and the com.google.android.nearby.messages.API_KEY metadata for beacons.

<meta-data

android:name="com.google.android.awareness.API_KEY"

android:value="@string/google_play_services_key" />

<!-- places/location declaration -->

<meta-data

android:name="com.google.android.geo.API_KEY"

android:value="@string/google_play_services_key" />

<!-- Beacon snapshots/fences declaration -->

<meta-data

android:name="com.google.android.nearby.messages.API_KEY"

android:value="@string/google_play_services_key" />

Next you will need to open your MainActivity.java file. Add the GoogleApiClient.OnConnectionFailedListener interface to your class and connect to Google Play Services and the Awareness API in your onCreate(Bundle) method.

public class MainActivity extends AppCompatActivity implements

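GoogleApiClient.OnConnectionFailedListener {

// Client used for every Awareness API call in this activity

private GoogleApiClient mGoogleApiClient;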

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

mGoogleApiClient = new GoogleApiClient.Builder(this)

.addApi(Awareness.API)

.enableAutoManage(this, this)

.build();

mGoogleApiClient.connect();

}

@Override

public void onConnectionFailed(@NonNull ConnectionResult connectionResult) {}

}

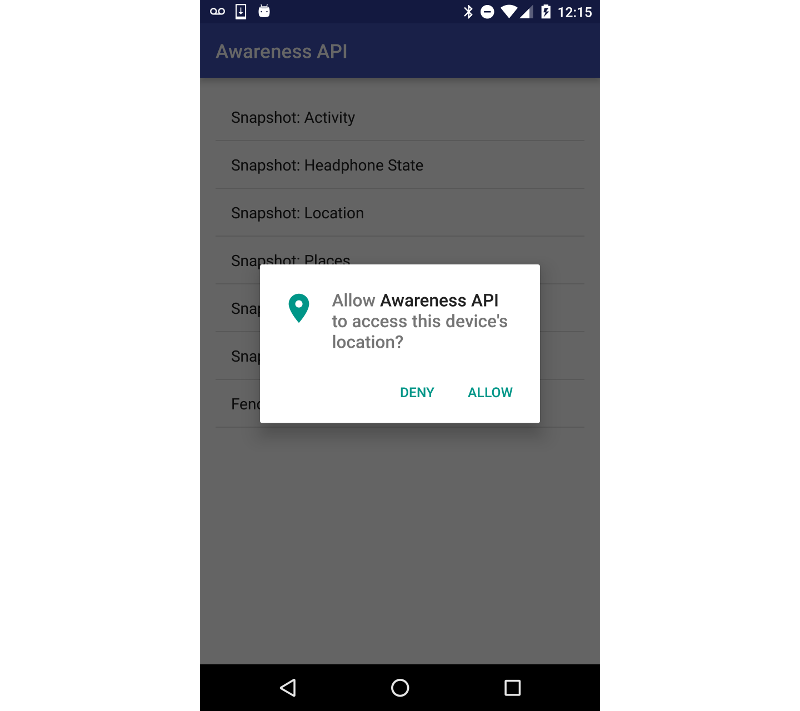

Step 2: Permissions

Now that Play Services are configured within your Android app, you will need to ensure that your users on Android Marshmallow or higher have granted permission for your application to use their location. You can check for this permission in onCreate(Bundle) and before you access any features that require the location permission in order to avoid crashes within your app.

private boolean checkLocationPermission() {

if( !hasLocationPermission() ) {

Log.e("Tuts+", "Does not have location permission granted");

requestLocationPermission();

return false;

}

return true;

}

private boolean hasLocationPermission() {

return ContextCompat.checkSelfPermission( this, Manifest.permission.ACCESS_FINE_LOCATION )

== PackageManager.PERMISSION_GRANTED;

}

If the location permission has not already been granted, you can then request that the user grant it.

private final static int REQUEST_PERMISSION_RESULT_CODE = 42;

private void requestLocationPermission() {

ActivityCompat.requestPermissions(

MainActivity.this,

new String[]{ Manifest.permission.ACCESS_FINE_LOCATION },

REQUEST_PERMISSION_RESULT_CODE );

}

This will cause a system dialog to appear asking the user if they would like to grant permission to your app to know their location.

When the user has responded, the onRequestPermissionsResult() callback will receive the results, and your app can respond accordingly.

@Override

public void onRequestPermissionsResult(int requestCode, @NonNull String permissions[],

@NonNull int[] grantResults) {

switch (requestCode) {

case REQUEST_PERMISSION_RESULT_CODE: {

// If request is cancelled, the result arrays are empty.

if (grantResults.length > 0

&& grantResults[0] == PackageManager.PERMISSION_GRANTED) {

//granted

} else {

Log.e("Tuts+", "Location permission denied.");

}

}

}

}
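The grant check inside the callback can be pulled out into a small helper so it is easy to reason about. This is a minimal plain-Java sketch with no Android dependency: the class name is hypothetical, and the two constants are local copies of the PackageManager.PERMISSION_GRANTED and PackageManager.PERMISSION_DENIED values.

```java
class PermissionResultSketch {

    // Local copies of the android.content.pm.PackageManager constants
    static final int PERMISSION_GRANTED = 0;
    static final int PERMISSION_DENIED = -1;

    // True only when a result exists and the first requested permission was granted.
    // An empty array means the request was cancelled.
    static boolean isGranted(int[] grantResults) {
        return grantResults != null
                && grantResults.length > 0
                && grantResults[0] == PERMISSION_GRANTED;
    }

    public static void main(String[] args) {
        System.out.println(isGranted(new int[]{ PERMISSION_GRANTED }));
        System.out.println(isGranted(new int[]{ PERMISSION_DENIED }));
        System.out.println(isGranted(new int[0]));
    }
}
```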

At this point you should be finished setting up your application for use of the Awareness API.

3. Using the Snapshot API

When you want to gather information about a user's current context, you can use the snapshot functionality of the Awareness API. This API will gather information depending on the type of API call made, and will cache this information for quick access across various apps.

Headphones

One of the new additions to Play Services through the Awareness API is the ability to detect a device's headphone state (plugged in or unplugged). This can be done by calling getHeadphoneState() on the Awareness API and reading the HeadphoneState from the HeadphoneStateResult.

private void detectHeadphones() {

Awareness.SnapshotApi.getHeadphoneState(mGoogleApiClient)

.setResultCallback(new ResultCallback<HeadphoneStateResult>() {

@Override

public void onResult(@NonNull HeadphoneStateResult headphoneStateResult) {

HeadphoneState headphoneState = headphoneStateResult.getHeadphoneState();

if (headphoneState.getState() == HeadphoneState.PLUGGED_IN) {

Log.e("Tuts+", "Headphones are plugged in.");

} else {

Log.e("Tuts+", "Headphones are NOT plugged in.");

}

}

});

}

Once you know the state of the headphones, your app can perform whatever actions are needed based on that information.

Location

Although previously available as a component in Google Play Services, the location feature of the Awareness API has been optimized for efficiency and battery usage. Rather than using the traditional Location API and receiving a location at specified intervals, you can request a one-time location snapshot like so.

private void detectLocation() {

if( !checkLocationPermission() ) {

return;

}

Awareness.SnapshotApi.getLocation(mGoogleApiClient)

.setResultCallback(new ResultCallback<LocationResult>() {

@Override

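public void onResult(@NonNull LocationResult locationResult) {

// The snapshot can fail, in which case no location is available

if( !locationResult.getStatus().isSuccess() ) {

Log.e("Tuts+", "Could not get location.");

return;

}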

Location location = locationResult.getLocation();

Log.e("Tuts+", "Latitude: " + location.getLatitude() + ", Longitude: " + location.getLongitude());

Log.e("Tuts+", "Provider: " + location.getProvider() + " time: " + location.getTime());

if( location.hasAccuracy() ) {

Log.e("Tuts+", "Accuracy: " + location.getAccuracy());

}

if( location.hasAltitude() ) {

Log.e("Tuts+", "Altitude: " + location.getAltitude());

}

if( location.hasBearing() ) {

Log.e("Tuts+", "Bearing: " + location.getBearing());

}

if( location.hasSpeed() ) {

Log.e("Tuts+", "Speed: " + location.getSpeed());

}

}

});

}

As you can see, you will first need to verify that the user has granted the location permission. If they have, you can retrieve a standard Location object with a large amount of data about the user's location and speed, as well as information on the accuracy of this data.

You will want to verify that a specific piece of information exists before using it, as some data may not be available. Running this code should output all available data to the Android system log.

E/Tuts+: Latitude: 39.9255456, Longitude: -105.02939579999999

E/Tuts+: Provider: Snapshot time: 1468696704662

E/Tuts+: Accuracy: 20.0

E/Tuts+: Altitude: 0.0

E/Tuts+: Speed: 0.0

Places

While not as robust as the standard Places API, the Awareness API does provide a quick, easy-to-use way to gather information about places near the user. This API call will return a List of PlaceLikelihood objects, each of which contains a Place and a float representing how likely it is that the user is at that place (hence the object's name).

Each Place object may contain a name, address, phone number, place type, user rating, and other useful information. You can request the nearby places for the user after verifying that the user has the location permission granted.

private void detectNearbyPlaces() {

if( !checkLocationPermission() ) {

return;

}

Awareness.SnapshotApi.getPlaces(mGoogleApiClient)

.setResultCallback(new ResultCallback<PlacesResult>() {

@Override

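public void onResult(@NonNull PlacesResult placesResult) {

// The snapshot can fail, in which case there is no list to iterate over

if( !placesResult.getStatus().isSuccess() || placesResult.getPlaceLikelihoods() == null ) {

Log.e("Tuts+", "Could not get nearby places.");

return;

}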

Place place;

for( PlaceLikelihood placeLikelihood : placesResult.getPlaceLikelihoods() ) {

place = placeLikelihood.getPlace();

Log.e("Tuts+", place.getName().toString() + "\n" + place.getAddress().toString() );

Log.e("Tuts+", "Rating: " + place.getRating() );

Log.e("Tuts+", "Likelihood that the user is here: " + placeLikelihood.getLikelihood() * 100 + "%");

}

}

});

}

When running the above method, you should see output similar to the following in the Android system log. If a numeric value such as the rating is not available, -1 will be returned.

E/Tuts+: North Side Tavern

12708 Lowell Blvd, Broomfield, CO 80020, USA

E/Tuts+: Rating: 4.7

E/Tuts+: Likelihood that the user is here: 10.0%

E/Tuts+: Quilt Store

12710 Lowell Blvd, Broomfield, CO 80020, USA

E/Tuts+: Rating: 4.3

E/Tuts+: Likelihood that the user is here: 10.0%

E/Tuts+: Absolute Floor Care

3508 W 126th Pl, Broomfield, CO 80020, USA

E/Tuts+: Rating: -1.0
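Because several nearby places often come back with low, similar likelihoods (as in the output above), an app will usually want to act only on a confident match. The following is a minimal plain-Java sketch of that selection. Candidate is a hypothetical stand-in for the name and likelihood read from each PlaceLikelihood, and the 0.5 threshold is an arbitrary assumption.

```java
import java.util.Arrays;
import java.util.List;

class PlaceLikelihoodSketch {

    // Hypothetical stand-in for the values read from a PlaceLikelihood
    static final class Candidate {
        final String name;
        final float likelihood;

        Candidate(String name, float likelihood) {
            this.name = name;
            this.likelihood = likelihood;
        }
    }

    // Returns the highest-likelihood candidate at or above the threshold, or null if none qualifies.
    // On a tie, the candidate that appears first in the list wins.
    static Candidate mostLikely(List<Candidate> candidates, float minLikelihood) {
        Candidate best = null;
        for (Candidate candidate : candidates) {
            if (candidate.likelihood >= minLikelihood
                    && (best == null || candidate.likelihood > best.likelihood)) {
                best = candidate;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<Candidate> nearby = Arrays.asList(
                new Candidate("North Side Tavern", 0.10f),
                new Candidate("Quilt Store", 0.10f));
        Candidate best = mostLikely(nearby, 0.5f);
        System.out.println(best == null ? "No confident match" : best.name);
    }
}
```

With the likelihoods from the sample output, no place reaches the threshold, so the sketch reports that there is no confident match rather than guessing.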

Weather

Another of the new additions to Google Play Services through the Awareness API is the ability to get the weather conditions for a user. This feature also requires the location permission for users on Marshmallow and later.

Using this request, you will be able to get the temperature in the user's area in either Fahrenheit or Celsius. You can also find out what the temperature feels like, the dew point (the temperature where water in the air can begin to condense into dew), the humidity percentage, and the weather conditions.

private void detectWeather() {

if( !checkLocationPermission() ) {

return;

}

Awareness.SnapshotApi.getWeather(mGoogleApiClient)

.setResultCallback(new ResultCallback<WeatherResult>() {

@Override

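public void onResult(@NonNull WeatherResult weatherResult) {

// The snapshot can fail, in which case no weather data is available

if( !weatherResult.getStatus().isSuccess() ) {

Log.e("Tuts+", "Could not get weather.");

return;

}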

Weather weather = weatherResult.getWeather();

Log.e("Tuts+", "Temp: " + weather.getTemperature(Weather.FAHRENHEIT));

Log.e("Tuts+", "Feels like: " + weather.getFeelsLikeTemperature(Weather.FAHRENHEIT));

Log.e("Tuts+", "Dew point: " + weather.getDewPoint(Weather.FAHRENHEIT));

Log.e("Tuts+", "Humidity: " + weather.getHumidity() );

if( weather.getConditions()[0] == Weather.CONDITION_CLOUDY ) {

Log.e("Tuts+", "Looks like there's some clouds out there");

}

}

});

}

The above code should output something similar to this.

E/Tuts+: Temp: 88.0

E/Tuts+: Feels like: 88.0

E/Tuts+: Dew point: 50.0

E/Tuts+: Humidity: 28

E/Tuts+: Looks like there's some clouds out there

One important thing to note here is that the weather condition value is stored as an int. The entire list of condition values can be found in the Weather object.

int CONDITION_UNKNOWN = 0;

int CONDITION_CLEAR = 1;

int CONDITION_CLOUDY = 2;

int CONDITION_FOGGY = 3;

int CONDITION_HAZY = 4;

int CONDITION_ICY = 5;

int CONDITION_RAINY = 6;

int CONDITION_SNOWY = 7;

int CONDITION_STORMY = 8;

int CONDITION_WINDY = 9;
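Since getConditions() returns an array of these int codes, it can help to translate them into readable labels before logging or displaying them. Here is a minimal plain-Java sketch of such a lookup. The class name and the label strings are hypothetical; only the numeric values come from the list above.

```java
class WeatherConditionNames {

    // Index in this array matches the condition code listed above
    private static final String[] NAMES = {
            "unknown", "clear", "cloudy", "foggy", "hazy",
            "icy", "rainy", "snowy", "stormy", "windy"
    };

    // Maps a single condition code to a label, falling back to "unknown"
    static String describe(int condition) {
        return (condition >= 0 && condition < NAMES.length) ? NAMES[condition] : NAMES[0];
    }

    // Joins the labels for every reported condition with commas
    static String describeAll(int[] conditions) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < conditions.length; i++) {
            if (i > 0) {
                sb.append(", ");
            }
            sb.append(describe(conditions[i]));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(describeAll(new int[]{ 2, 9 }));
    }
}
```

A helper like this also avoids reading only the first element of the array, since more than one condition can be reported at once.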

Activity

Your user's activity will play a large part in how they interact with their device, and detecting that activity will allow you to provide a more fluid user experience.

For example, if you have a fitness app, you may want to detect when a user is running so you can start recording a Google Fit session, or you may want to send a notification to your user if you detect that they have been still for too many hours during the day.

Using the getDetectedActivity() call in the Awareness API, you can get a list of probable activities, a confidence value for each one, and the time at which the detection was made.

private void detectActivity() {

Awareness.SnapshotApi.getDetectedActivity(mGoogleApiClient)

.setResultCallback(new ResultCallback<DetectedActivityResult>() {

@Override

public void onResult(@NonNull DetectedActivityResult detectedActivityResult) {

ActivityRecognitionResult result = detectedActivityResult.getActivityRecognitionResult();

Log.e("Tuts+", "time: " + result.getTime());

Log.e("Tuts+", "elapsed time: " + result.getElapsedRealtimeMillis());

Log.e("Tuts+", "Most likely activity: " + result.getMostProbableActivity().toString());

for( DetectedActivity activity : result.getProbableActivities() ) {

Log.e("Tuts+", "Activity: " + activity.getType() + " Likelihood: " + activity.getConfidence() );

}

}

});

}

The above method will log the time of the detection (both as UTC time and as elapsed real time since the device booted), the most likely activity for the user, and the list of all probable activities with their confidence values.

E/Tuts+: time: 1468701845962

E/Tuts+: elapsed time: 15693985

E/Tuts+: Most likely activity: DetectedActivity [type=STILL, confidence=100]

E/Tuts+: Activity: 3 Likelihood: 100
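The two time values in this output are raw millisecond counts: getTime() is the UTC time of the detection in milliseconds since January 1, 1970, and getElapsedRealtimeMillis() is the time of the detection measured in milliseconds since the device booted. A minimal plain-Java sketch for making them readable follows. The class and method names are hypothetical, and java.time is assumed to be available in your build.

```java
import java.time.Duration;
import java.time.Instant;

class ActivityTimestampSketch {

    // Formats a UTC epoch timestamp in milliseconds as an ISO-8601 instant
    static String detectionTimeUtc(long epochMillis) {
        return Instant.ofEpochMilli(epochMillis).toString();
    }

    // Formats a milliseconds-since-boot value as an ISO-8601 duration
    static String sinceBoot(long elapsedRealtimeMillis) {
        return Duration.ofMillis(elapsedRealtimeMillis).toString();
    }

    public static void main(String[] args) {
        // Values taken from the sample log output above
        System.out.println(detectionTimeUtc(1468701845962L)); // 2016-07-16T20:44:05.962Z
        System.out.println(sinceBoot(15693985L));             // PT4H21M33.985S
    }
}
```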

The DetectedActivity type values can be mapped to the following values:

int IN_VEHICLE = 0;

int ON_BICYCLE = 1;

int ON_FOOT = 2;

int STILL = 3;

int UNKNOWN = 4;

int TILTING = 5;

int WALKING = 7;

int RUNNING = 8;
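To log a readable name instead of the raw type int, you could map the values with a small lookup. This is a minimal plain-Java sketch with a hypothetical class name. It covers only the values listed above and treats anything else, including the unlisted value 6, as UNKNOWN.

```java
class ActivityTypeNames {

    // Maps a DetectedActivity type value to the name listed in the tutorial
    static String nameOf(int type) {
        switch (type) {
            case 0: return "IN_VEHICLE";
            case 1: return "ON_BICYCLE";
            case 2: return "ON_FOOT";
            case 3: return "STILL";
            case 5: return "TILTING";
            case 7: return "WALKING";
            case 8: return "RUNNING";
            default: return "UNKNOWN"; // 4, plus any value not listed above
        }
    }

    public static void main(String[] args) {
        // Type value taken from the sample log output
        System.out.println("Activity: " + nameOf(3) + " Likelihood: 100");
    }
}
```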

Beacons

The final type of snapshot—and most difficult to set up because it requires a real-world component—involves BLE (Bluetooth Low Energy) beacons. While the Nearby API is beyond the scope of this tutorial, you can initialize beacons for your own Google Services project using Google's Beacon Tools app.

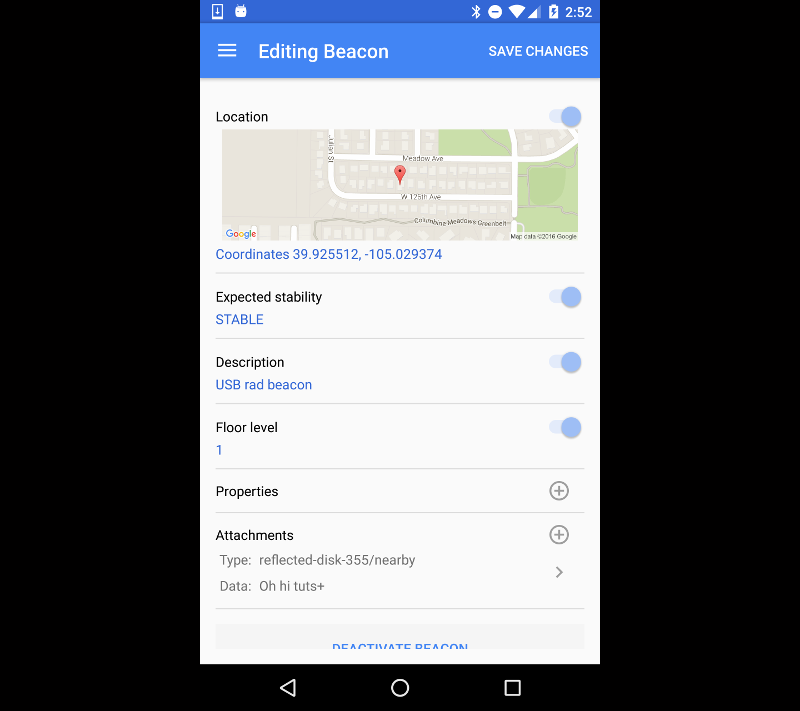

An important thing to note is that once you have registered a beacon to a Google API project, you cannot unregister it without resetting the beacon id. This means if you delete that project, the beacon will need to be reconfigured using your manufacturer's app. For the Awareness API, the namespace must match the Google project that you are using for your Awareness API calls. The above beacon was already registered to a personal test Google project, hence the different namespace (reflected-disk-355) from that of the sample project associated with this tutorial.

In the above screenshot, you can see one item under Attachments. The namespace for this attachment is reflected-disk-355 (this tutorial's example project's namespace is tutsplusawareness) and the type is nearby. You will need this information for your own beacons in order to detect attachments with the Awareness API.

When you have beacons configured, you can return to your code. You will need to create a List of BeaconState.TypeFilter objects so that your app can filter out beacons and attachments that do not relate to your application.

private static final List<BeaconState.TypeFilter> BEACON_TYPE_FILTERS = Arrays.asList(

BeaconState.TypeFilter.with(

//replace these with your beacon's values

"namespace",

"type") );

If you have reason to believe that your user is near a beacon, you can request attachments from beacons that fit the filter requirements above. This will require the location permission for users on Marshmallow and later.

private void detectBeacons() {

if( !checkLocationPermission() ) {

return;

}

Awareness.SnapshotApi.getBeaconState(mGoogleApiClient, BEACON_TYPE_FILTERS)

.setResultCallback(new ResultCallback<BeaconStateResult>() {

@Override

public void onResult(@NonNull BeaconStateResult beaconStateResult) {

if (!beaconStateResult.getStatus().isSuccess()) {

Log.e("Tuts+", "Could not get beacon state.");

return;

}

BeaconState beaconState = beaconStateResult.getBeaconState();

if( beaconState == null ) {

Log.e("Tuts+", "beacon state is null");

} else {

for(BeaconState.BeaconInfo info : beaconState.getBeaconInfo()) {

Log.e("Tuts+", new String(info.getContent()));

}

}

}

});

}

This code will log out information for the attachment associated with the example beacon above. For this example, I have configured two beacons with the same namespace and type to demonstrate that multiple beacons can be detected at once.

E/Tuts+: Oh hi tuts+

E/Tuts+: Portable Beacon info
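The attachment content arrives as a byte array, so turning it into text means choosing a character set. A minimal plain-Java sketch that decodes with an explicit charset and tolerates a missing payload is shown here. The class name is hypothetical, and UTF-8 is an assumption about how the attachment text was stored.

```java
import java.nio.charset.StandardCharsets;

class AttachmentDecodeSketch {

    // Decodes attachment bytes as UTF-8 text; returns an empty string when there is no content
    static String decode(byte[] content) {
        return content == null ? "" : new String(content, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] sample = "Oh hi tuts+".getBytes(StandardCharsets.UTF_8);
        System.out.println(decode(sample));
    }
}
```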

4. Using the Fences API

While the Snapshot API can grab information about the user's context at a particular time, the Fences API listens for specific conditions to be met before allowing an action to occur. The Fences API is optimized for efficient battery and data usage, so as to be courteous to your users.

There are five types of conditions that you can check for when creating fences:

- device conditions, such as user having headphones unplugged or plugged in

- location, similar to a standard geofence

- the presence of specific BLE beacons

- user activity, such as running or driving

- time

At this time, weather conditions and places do not have support for fences. You can make a fence that uses any of the supported features; however, a really handy feature of this API is that logical operations can be applied to conditions. You can take multiple fences and use and, or, and not operations to combine the conditions to fit your app's needs.
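To see how and, or, and not operations compose, it can help to model the conditions as plain boolean predicates first. The sketch below does not use the Awareness API at all: UserContext and the predicate names are hypothetical, and it only illustrates the logic of combining conditions such as "headphones plugged in and running, but not at home".

```java
import java.util.function.Predicate;

class FenceLogicSketch {

    // Hypothetical snapshot of the conditions an app cares about
    static final class UserContext {
        final boolean headphonesPluggedIn;
        final boolean running;
        final boolean atHome;

        UserContext(boolean headphonesPluggedIn, boolean running, boolean atHome) {
            this.headphonesPluggedIn = headphonesPluggedIn;
            this.running = running;
            this.atHome = atHome;
        }
    }

    static final Predicate<UserContext> HEADPHONES = c -> c.headphonesPluggedIn;
    static final Predicate<UserContext> RUNNING = c -> c.running;
    static final Predicate<UserContext> AT_HOME = c -> c.atHome;

    // Combined condition: headphones AND running AND NOT at home
    static final Predicate<UserContext> WORKOUT =
            HEADPHONES.and(RUNNING).and(AT_HOME.negate());

    static boolean isWorkout(boolean headphones, boolean running, boolean atHome) {
        return WORKOUT.test(new UserContext(headphones, running, atHome));
    }

    public static void main(String[] args) {
        System.out.println(isWorkout(true, true, false)); // true
        System.out.println(isWorkout(true, true, true));  // false
    }
}
```

The difference with real fences is that you never evaluate the condition yourself; Play Services evaluates it and notifies your app when the combined state changes.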

Create a BroadcastReceiver

Before you create your fence, you will need to have a key value representing each fence that your app will listen for. To finish off this tutorial, you will build a fence that detects when a user is sitting at a set location, such as their home.

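private final static String KEY_SITTING_AT_HOME = "sitting_at_home";

// Action for the fence broadcast Intent; the value here is an example and can be any unique string

private final static String ACTION_FENCE = "action_fence";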

Once you have a key defined, you can listen for a broadcast Intent that contains that key.

public class FenceBroadcastReceiver extends BroadcastReceiver {

@Override

public void onReceive(Context context, Intent intent) {

if(TextUtils.equals(ACTION_FENCE, intent.getAction())) {

FenceState fenceState = FenceState.extract(intent);

if( TextUtils.equals(KEY_SITTING_AT_HOME, fenceState.getFenceKey() ) ) {

if( fenceState.getCurrentState() == FenceState.TRUE ) {

Log.e("Tuts+", "You've been sitting at home for too long");

}

}

}

}

}

Create Fences

Now that you have a receiver created to listen for user events, it's time to create your fences. The first AwarenessFence you will create will listen for when the user is in a STILL state.

AwarenessFence activityFence = DetectedActivityFence.during(DetectedActivityFence.STILL);

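The second fence you will create will wait for the user to be in range of a specific location. While this sample has values for latitude and longitude already set, you will want to change them to the coordinates of your own location for testing. The third argument is the radius of the fence in meters, and the fourth is how long, in milliseconds, the user must remain inside the area before the fence becomes true.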

AwarenessFence homeFence = LocationFence.in(39.92, -105.7, 100000, 1000 );
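To get a feel for how large a 100,000 meter radius is, you can estimate the distance between the fence center and a test coordinate yourself. This is a minimal plain-Java sketch using the haversine formula on a spherical Earth. It is only an approximation for choosing test values, not how Play Services evaluates the fence, and the class and method names are hypothetical.

```java
class LocationFenceSketch {

    // Mean Earth radius; the sphere model is an approximation
    static final double EARTH_RADIUS_METERS = 6_371_000.0;

    // Great-circle distance between two latitude/longitude points, in meters (haversine formula)
    static double distanceMeters(double lat1, double lon1, double lat2, double lon2) {
        double dLat = Math.toRadians(lat2 - lat1);
        double dLon = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dLat / 2) * Math.sin(dLat / 2)
                + Math.cos(Math.toRadians(lat1)) * Math.cos(Math.toRadians(lat2))
                * Math.sin(dLon / 2) * Math.sin(dLon / 2);
        return 2 * EARTH_RADIUS_METERS * Math.asin(Math.sqrt(a));
    }

    // True when the point lies within radiusMeters of the center
    static boolean isInside(double centerLat, double centerLon, double radiusMeters,
                            double lat, double lon) {
        return distanceMeters(centerLat, centerLon, lat, lon) <= radiusMeters;
    }

    public static void main(String[] args) {
        // Fence center from the sample above, tested against the coordinates logged earlier
        System.out.println(isInside(39.92, -105.7, 100000, 39.9255456, -105.0293958));
    }
}
```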

Now that you have two fences, you can combine them to create a third AwarenessFence by using the AwarenessFence.and operation.

AwarenessFence sittingAtHomeFence = AwarenessFence.and(homeFence, activityFence);

Finally, you can create a PendingIntent that will be broadcast to your BroadcastReceiver and add it to the Awareness API using the updateFences method.

Intent intent = new Intent(ACTION_FENCE);

PendingIntent fencePendingIntent = PendingIntent.getBroadcast(this, 0, intent, 0);

mFenceBroadcastReceiver = new FenceBroadcastReceiver();

registerReceiver(mFenceBroadcastReceiver, new IntentFilter(ACTION_FENCE));

FenceUpdateRequest.Builder builder = new FenceUpdateRequest.Builder();

builder.addFence(KEY_SITTING_AT_HOME, sittingAtHomeFence, fencePendingIntent);

Awareness.FenceApi.updateFences( mGoogleApiClient, builder.build() );

Now, the app will log a message when the user is sitting down within range of the specified location.

Conclusion

In this tutorial, you have learned about the Awareness API and how to gather current information about the user's environment. You have also learned how to register a listener for changes in the user's context and act when specific conditions have been met.

With this information, you should be able to expand your own apps and provide users with more amazing experiences based on their current location, activity, and other useful values.
